Earlier this month, Google made a subtle change to Search Console that many people missed: it changed the maximum quotas for the number of times you can manually ask Google to crawl a URL. Previously, you could request that Google crawl a specific URL 500 times per 30-day period. You could also request that Google crawl a specific URL and all of the pages it links to directly 10 times per 3-day period. Under the new limits, you can request a specific URL 10 times per day, and a specific URL together with the pages it links to directly 2 times per day.
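
To put the new limits in perspective, the sketch below converts each quota quoted above into a 30-day equivalent. It is simple arithmetic in Python, and it assumes the daily limits are used in full every day, which few site owners would ever do.

```python
# Manual crawl-request quotas as quoted above: (requests, period in days).
OLD_SINGLE_URL = (500, 30)
OLD_WITH_LINKS = (10, 3)
NEW_SINGLE_URL = (10, 1)
NEW_WITH_LINKS = (2, 1)


def per_30_days(quota):
    """Scale a (requests, days) quota to its 30-day equivalent."""
    requests, days = quota
    return requests * 30 / days


for label, old, new in [
    ("single URL", OLD_SINGLE_URL, NEW_SINGLE_URL),
    ("URL + direct links", OLD_WITH_LINKS, NEW_WITH_LINKS),
]:
    print(f"{label}: {per_30_days(old):.0f} -> {per_30_days(new):.0f} per 30 days")
```

Run as written, it prints 500 -> 300 for single URLs and 100 -> 60 for a URL plus its direct links, so even a site owner who maxed out the new daily quotas would have fewer requests than before.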

For most marketers, this change will not cause much trouble, because they only submit URLs after updating a page, and few people would do so more often than the maximum allows. Webleads keeps clients informed of industry changes, and this one gives us a chance to reiterate the importance of the fundamentals of site hygiene (taxonomy, internal links, robots.txt, and sitemaps) and how they influence the crawl, the crawl budget, and the indexing of site pages.

What Is Crawl Management, and Why Does It Matter?

Crawl management describes SEOs' efforts to control how search engines crawl their websites, including which pages they visit and how they navigate the site.

Search engine spiders can enter and exit a website in any order. They may land on your site through a random product page and leave through your homepage. Once the spider has landed on your site, however, you want to make sure it can navigate your domain easily. The way your pages link together directs the spider to new pages. It also tells the spider how the site is organized, which helps it determine where your brand's expertise lies.
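
Because spiders discover new pages by following links, one way to check your structure is to measure how many clicks each page sits from the homepage and to flag pages that no internal link reaches. The following is a minimal breadth-first search sketch in Python; the `LINKS` graph and its URLs are hypothetical, and it is not a description of how Googlebot actually crawls.

```python
from collections import deque

# Hypothetical internal-link graph: each page maps to the pages it links to.
LINKS = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/crawl-budget/", "/blog/sitemaps/"],
    "/services/": ["/services/seo/"],
    "/blog/crawl-budget/": ["/blog/sitemaps/", "/"],
    "/blog/sitemaps/": [],
    "/services/seo/": [],
    "/old-landing-page/": ["/"],  # links out, but nothing links to it
}


def click_depths(links, start="/"):
    """Breadth-first search from start; returns {page: clicks from start}."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


depths = click_depths(LINKS)
# Pages we know about that the homepage cannot reach through internal links.
orphans = sorted(set(LINKS) - set(depths))
print(depths)
print("Unreachable from homepage:", orphans)
```

Pages with a high click depth, or none at all, are the ones a spider is least likely to find by following your links.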

Crawling remains central to how your pages rank on the SERP. The spider records information about your pages, which is then used to help determine your rankings. When you update a page, the changes will not affect your rankings until Google returns to crawl it again.

Crawl management can also protect your reputation with Google. Through techniques such as configuring your robots.txt file, you can keep spiders away from certain content, such as duplicate content, and avoid the associated penalties.

Google uses a crawl budget to determine how much of your content to crawl and when. According to Google's Gary Illyes, however, crawl budget should not be a primary concern for most websites. It is more likely to be a consideration for sites with very large numbers of pages.

Illyes reports that Google determines crawl budget based on two main factors:

- How often and how fast Googlebot can crawl your site without overloading your server. If the spider detects that crawling is slowing the site down for users, it will crawl less often.

- The demand for your content, including the popularity of your site and how fresh your content is compared with other content on the topic.
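
Google has described the first factor as a "crawl rate limit." As a rough illustration only, the sketch below models a polite crawler with an additive-increase/multiplicative-decrease rule: it halves its request rate when the server responds slowly and raises it gradually otherwise. The function name, the 1,000 ms threshold, and the rate bounds are all hypothetical; Google has not published its actual algorithm.

```python
def adjust_crawl_rate(rate, response_ms, slow_ms=1000, min_rate=0.1, max_rate=10.0):
    """Hypothetical politeness rule (not Google's algorithm).

    Halve the crawl rate (requests per second) when the server responds
    slower than slow_ms, otherwise add 0.5, clamped to [min_rate, max_rate].
    """
    if response_ms > slow_ms:
        rate = rate / 2  # back off quickly when the server struggles
    else:
        rate = rate + 0.5  # recover slowly while responses are fast
    return max(min_rate, min(max_rate, rate))


rate = 2.0  # starting rate, requests per second
for ms in [300, 400, 1500, 2500, 350]:
    rate = adjust_crawl_rate(rate, ms)
    print(f"response {ms} ms -> crawl rate {rate:.2f} req/s")
```

The asymmetry is the point: a slow server quickly earns fewer crawler visits, which is why site speed matters for large sites.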

Site owners with thousands of pages, therefore, will need to carefully consider their site's capacity to handle crawls, their site speed, and the freshness of their content to make the most of their crawl budget.

How Do I Manage Crawling Successfully?

Successful crawl management requires building a site that is both user- and bot-friendly, while carefully shielding pages you do not want the bot to discover. Consider the site structure before you design or redesign a website. The taxonomy you choose will determine how you categorize and link posts.
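
As a minimal sketch of how a taxonomy shapes links, the Python below groups hypothetical posts by category, derives a hub URL for each category, and lists the post URLs each hub page would link to. The post slugs, categories, and URL pattern are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical posts, each assigned to one category in the site taxonomy.
POSTS = [
    {"slug": "crawl-budget-basics", "category": "technical-seo"},
    {"slug": "robots-txt-guide", "category": "technical-seo"},
    {"slug": "writing-title-tags", "category": "on-page-seo"},
]


def build_hubs(posts):
    """Group posts by category; return {hub URL: [post URLs it links to]}."""
    hubs = defaultdict(list)
    for post in posts:
        hub_url = f"/{post['category']}/"
        # Nest each post under its category so the URL mirrors the taxonomy.
        hubs[hub_url].append(f"{hub_url}{post['slug']}/")
    return dict(hubs)


for hub_url, post_urls in build_hubs(POSTS).items():
    print(hub_url, "->", post_urls)
```

Each hub page then gives readers and spiders a single path to every post in its category.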

Linking posts in a useful way will help both your readers and the bot understand your structure. When creating links, remember to focus on people first and bots second: the main priority should be providing value, not just trying to please the bot.

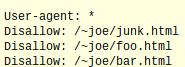

As you build your site, you may also find it useful to mark certain pages as "Disallow" in robots.txt to indicate that you do not want search engines to crawl them. This can be for a variety of reasons, such as duplicate content on the page, or a page you are still building and do not want included just yet. These directives let you steer search engine spiders away from certain content, protecting your site's reputation.
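
A `Disallow` rule is a single line in robots.txt. The sketch below uses a hypothetical robots.txt for `www.example.com` that blocks a page under construction and a printer-friendly duplicate, then checks sample URLs with Python's standard `urllib.robotparser` module. Note that this parser applies the first matching rule, whereas Google uses the most specific match, so results can differ for files that mix `Allow` and `Disallow`. Also keep in mind that robots.txt controls crawling, not indexing: a disallowed URL can still appear in results if other pages link to it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all crawlers out of a section under
# construction and out of printer-friendly duplicates of blog posts.
ROBOTS_TXT = """\
User-agent: *
Disallow: /under-construction/
Disallow: /print/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://www.example.com/blog/crawl-budget/",
    "https://www.example.com/under-construction/new-page.html",
    "https://www.example.com/print/crawl-budget/",
]:
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "disallowed"
    print(url, "->", verdict)
```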

Crawl management would not be complete without also discussing your sitemap. Google recommends that when building a sitemap, you carefully go through each page and determine its canonical version to help avoid duplicate content issues. You can then submit your sitemap to Google to show it how to crawl your site. This helps ensure that Google can find all your content, even if your internal linking has not been especially strong.
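
Following the advice to list only canonical URLs, the sketch below builds a minimal sitemap with Python's standard `xml.etree.ElementTree`. The `PAGES` inventory, which maps each crawled URL to its canonical version, is hypothetical; in practice it might come from your CMS or a site crawl. Each canonical URL appears once, so parameterized and printer-friendly duplicates are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical inventory: every known URL mapped to its canonical URL.
# Tracking-parameter and print versions point at the canonical page.
PAGES = {
    "https://www.example.com/": "https://www.example.com/",
    "https://www.example.com/blog/crawl-budget/": "https://www.example.com/blog/crawl-budget/",
    "https://www.example.com/blog/crawl-budget/?utm_source=mail": "https://www.example.com/blog/crawl-budget/",
    "https://www.example.com/print/crawl-budget/": "https://www.example.com/blog/crawl-budget/",
}


def build_sitemap(pages):
    """Return sitemap XML that lists each canonical URL exactly once."""
    canonical_urls = sorted(set(pages.values()))  # drop duplicates
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in canonical_urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap(PAGES))
```

You can then submit the resulting file in Search Console or reference it with a `Sitemap:` line in robots.txt.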

What Do You Need to Know About the Changes to the Crawl Limits?

- Google has changed the quotas for the number of URLs you can manually ask Google to crawl.

- Google made these changes to encourage people to build naturally crawlable sites, so they do not need to manually request that each URL be crawled.

- Following crawl management best practices, such as sound site organization or taxonomy, internal linking, and robots.txt, can help you encourage crawling and ensure that your site earns a good reputation with both bots and users.

- Creating a sitemap will help you tell Google about your site's organization and priorities.

Google continues to push site owners toward high-quality site construction. It often does so through algorithm updates that penalize low-quality content and reward valuable content. This change to the crawl limits likely has similar intentions. Brands that focus on site organization and prioritize user-friendly layouts will find that this change affects them minimally. If you previously used this crawl feature on a regular basis, Google wants you to know that the time has come to redesign your site.

For further information about SEO, visit:

Best SEO Service in Delhi: http://www.webleads.online/seo-services.html